Query JSON and NDJSON files on Amazon S3: the selectObjectContent API makes it easy to query JSON and NDJSON (newline-delimited JSON) data stored in S3. To run the Python example, execute `python read_s3.py`, which runs the script that reads from S3.
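As a minimal sketch of the same call from Python, the function below runs an S3 Select query over an NDJSON object with boto3's `select_object_content` and aggregates the streamed `Records` events into a list of rows. The client is passed in (assumed to be `boto3.client("s3")`), so the function does not care how credentials are configured; the function name and the bucket, key, and query in the usage line are hypothetical.

```python
import json


def select_ndjson(client, bucket, key, expression):
    """Run an S3 Select SQL expression over an NDJSON (JSON Lines) object.

    `client` is assumed to be a boto3 S3 client. Returns the matching
    rows as a list of Python objects.
    """
    response = client.select_object_content(
        Bucket=bucket,
        Key=key,
        Expression=expression,
        ExpressionType="SQL",
        # LINES = one JSON value per line (NDJSON)
        InputSerialization={"JSON": {"Type": "LINES"}},
        # Emit each result record as one JSON line
        OutputSerialization={"JSON": {"RecordDelimiter": "\n"}},
    )
    # The payload is an event stream (Records, Stats, End, ...).
    # Keep only the Records chunks and join them before splitting lines,
    # because one record is not guaranteed to fit inside one event.
    chunks = []
    for event in response["Payload"]:
        if "Records" in event:
            chunks.append(event["Records"]["Payload"])
    text = b"".join(chunks).decode("utf-8")
    return [json.loads(line) for line in text.splitlines() if line.strip()]
```

For example, `select_ndjson(boto3.client("s3"), "my-bucket", "logs/events.ndjson", "SELECT * FROM S3Object s")` would return every row of that (hypothetical) NDJSON object as a list of dicts.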

# S3 JSON query code

Execute the Python file containing the code described above, which reads from S3. The `cli-input-json` option takes a JSON structure that provides additional …

You can think of S3 Select as a limited version of Amazon Athena, which lets you pay only for the queries you run, based on the amount of data read from S3 per query. S3 Select can query a JSON, CSV, or Apache Parquet file directly, without downloading the file first. See the official AWS documentation for more information.

Amazon S3 Select always treats a JSON document as an array of root-level values. Thus, even if the JSON object that you are querying has only one root element, the FROM clause must begin with S3Object.

In Node.js, a module can wrap the selectObjectContent method and expose the data as a single aggregated result through a Promise.

(2) Read from your environment variables (my preferred option for deployment):

```python
from os import environ

aws_access_key_id = environ['MY_AWS_KEY_ID']
aws_secret_access_key = environ['MY_AWS_SECRET_ACCESS_KEY']
```

Let's prepare a shell script called read_s3_using_env.sh that sets these environment variables and then runs our Python script (read_s3.py):

```shell
# read_s3_using_env.sh
export MY_AWS_KEY_ID='YOUR_AWS_ACCESS_KEY_ID'
export MY_AWS_SECRET_ACCESS_KEY='YOUR_AWS_SECRET_ACCESS_KEY'
python read_s3.py  # runs the Python script that reads from S3
```

Amazon Athena: You can query AWS CloudTrail logs in Amazon Athena, and we will be adding support for querying the TLS values in your CloudTrail logs in the coming months. Look for updates and announcements about this in future AWS Security Blog posts.
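The FROM-clause rule matters when the object is a single JSON document rather than NDJSON. A minimal sketch, assuming a hypothetical document whose root object holds a `skills` array: the request switches `InputSerialization` to `Type: DOCUMENT`, and the SQL starts its FROM clause at `S3Object[*]` before following a path into the array. The helper name, field name, and path expression are illustrative; check the S3 Select SQL reference for the exact path syntax your query needs.

```python
def document_select_params(bucket, key, expression):
    """Build select_object_content kwargs for a single JSON document.

    S3 Select treats the document as an array of root-level values,
    so the FROM clause has to start from S3Object.
    """
    # Normalise whitespace and case before checking the FROM clause.
    normalised = " ".join(expression.upper().split())
    if "FROM S3OBJECT" not in normalised:
        raise ValueError("FROM clause must begin with S3Object")
    return {
        "Bucket": bucket,
        "Key": key,
        "Expression": expression,
        "ExpressionType": "SQL",
        # DOCUMENT = the whole object is one JSON value (may span many lines)
        "InputSerialization": {"JSON": {"Type": "DOCUMENT"}},
        "OutputSerialization": {"JSON": {"RecordDelimiter": "\n"}},
    }


# Hypothetical query: one output record per element of a root-level "skills" array.
SKILLS_QUERY = "SELECT s.name FROM S3Object[*].skills[*] s"
params = document_select_params("my-bucket", "profiles/user.json", SKILLS_QUERY)
```

The resulting dict would be passed as `client.select_object_content(**params)`; the only differences from the NDJSON case are the input `Type` and the shape of the FROM clause.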

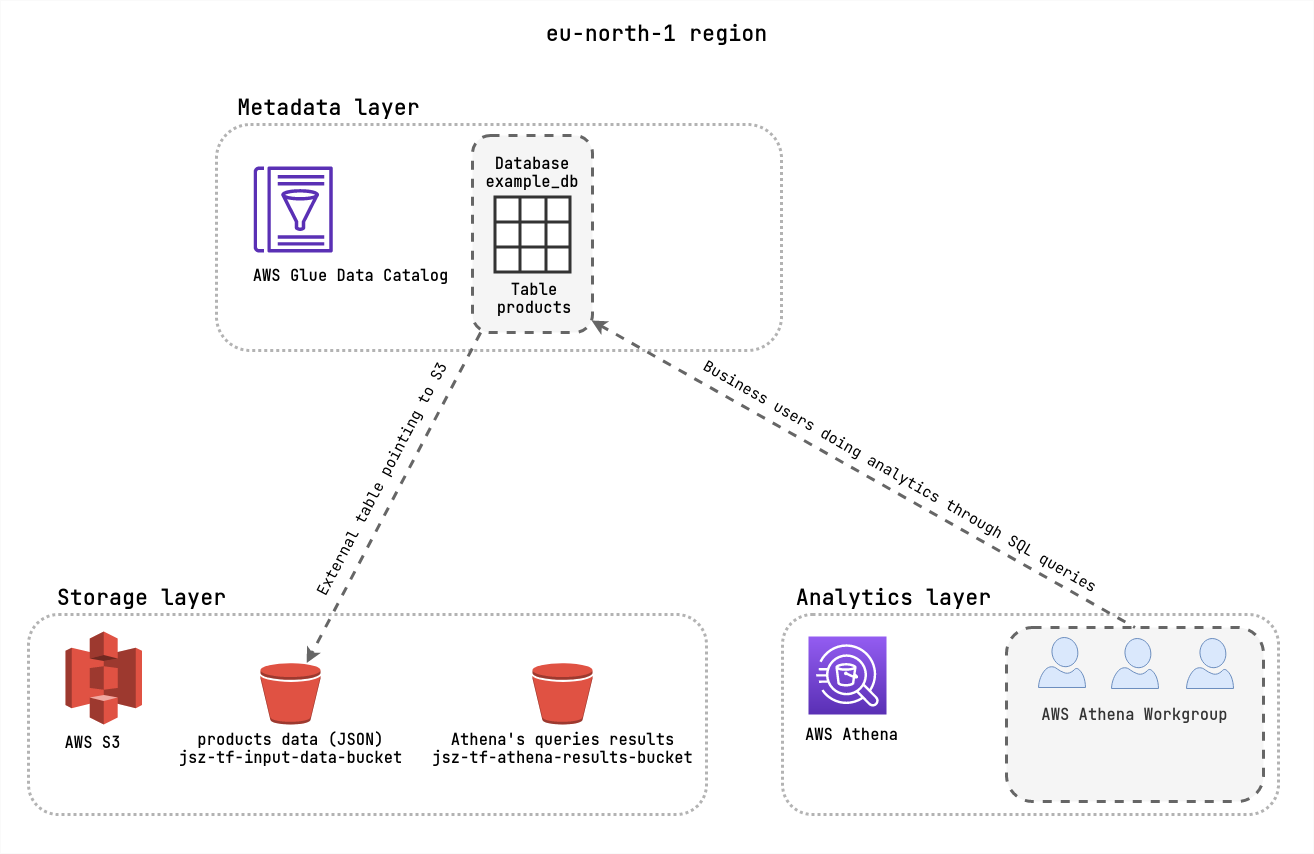

It is not a good idea to hard-code the AWS access key ID and secret access key directly. For best practice, consider either of the following:

(1) Read your AWS credentials from a JSON file (aws_cred.json) stored locally:

```python
from json import load

with open('local_fold/aws_cred.json') as f:
    credentials = load(f)
# Key names are an assumption; match them to your own file.
aws_access_key_id = credentials['MY_AWS_KEY_ID']
aws_secret_access_key = credentials['MY_AWS_SECRET_ACCESS_KEY']
```

Amazon Athena is serverless and built on top of Presto with ANSI SQL support, so you can run queries using SQL. It works on objects stored in CSV, JSON, or Apache Parquet format, including compressed files and large files of several TBs. It has 3 basic building blocks: AWS S3 - Persistent …

Query JSON with S3 Select in Node.js: see the example-query.js gist by thetrevorharmon (forked by germanviscuso).

I need to extract all the skill objects from the JSON structure below.
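Both options can be combined into one helper. This is a minimal sketch, assuming the environment variable names from read_s3_using_env.sh (`MY_AWS_KEY_ID`, `MY_AWS_SECRET_ACCESS_KEY`) and assuming, hypothetically, that aws_cred.json stores the keys under the same names; it prefers the environment and falls back to the file. The function name is illustrative.

```python
import json
import os


def load_aws_credentials(cred_path="local_fold/aws_cred.json"):
    """Return (access_key_id, secret_access_key) without hard-coding them.

    Tries option (2) first: the environment variables exported by
    read_s3_using_env.sh. Falls back to option (1): a local JSON file.
    """
    key_id = os.environ.get("MY_AWS_KEY_ID")
    secret = os.environ.get("MY_AWS_SECRET_ACCESS_KEY")
    if key_id and secret:
        return key_id, secret
    with open(cred_path) as f:
        creds = json.load(f)
    # Assumption: the JSON file uses the same names as the env variables.
    return creds["MY_AWS_KEY_ID"], creds["MY_AWS_SECRET_ACCESS_KEY"]
```

The pair can then be passed to `boto3.client("s3", aws_access_key_id=..., aws_secret_access_key=...)`. Alternatively, boto3 picks up credentials by itself from the standard `AWS_ACCESS_KEY_ID` and `AWS_SECRET_ACCESS_KEY` environment variables or from `~/.aws/credentials`, which avoids passing keys around in code at all.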

```python
# read_s3.py
import boto3

BUCKET = 'MY_S3_BUCKET_NAME'  # placeholder: your bucket name
FILE_TO_READ = 'FOLDER_NAME/my_file.json'
client = boto3.client('s3', aws_access_key_id='MY_AWS_KEY_ID',
                      aws_secret_access_key='MY_AWS_SECRET_ACCESS_KEY')
result = client.get_object(Bucket=BUCKET, Key=FILE_TO_READ)
text = result['Body'].read().decode('utf-8')
print(text)  # parse with json.loads(text) to use your desired JSON key
```

Let's call the above code snippet read_s3.py.

Only the metadata files are JSON files. The following worked for me. I am attempting to create a table from an S3 bucket that has a file structure like so: my-bucket/
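In read_s3.py the object body is read into `text` as a plain string, so picking out a value by JSON key first needs `json.loads`. The sketch below takes a boto3 S3 client as a parameter, parses the object, and then collects every value stored under a given key at any depth. That is one way to pull out, say, all `skill` objects from a nested structure; the helper names and the key name are hypothetical.

```python
import json


def read_json_object(client, bucket, key):
    """Download an S3 object with get_object and parse it as JSON."""
    result = client.get_object(Bucket=bucket, Key=key)
    return json.loads(result["Body"].read().decode("utf-8"))


def find_all(node, target_key):
    """Collect every value stored under `target_key`, at any nesting depth."""
    found = []
    if isinstance(node, dict):
        for k, v in node.items():
            if k == target_key:
                found.append(v)
            # Keep descending: matching values may contain further matches.
            found.extend(find_all(v, target_key))
    elif isinstance(node, list):
        for item in node:
            found.extend(find_all(item, target_key))
    return found
```

For example, `find_all(read_json_object(client, BUCKET, FILE_TO_READ), "skill")` would return a list of every `skill` value in the file. This downloads the whole object, which is fine for small files; for large ones, an S3 Select query does the filtering server-side instead.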
